Constituting an AI: Accountability Lessons from a LLM Experiment

September 2023

Hello! This post shares a recent working paper…

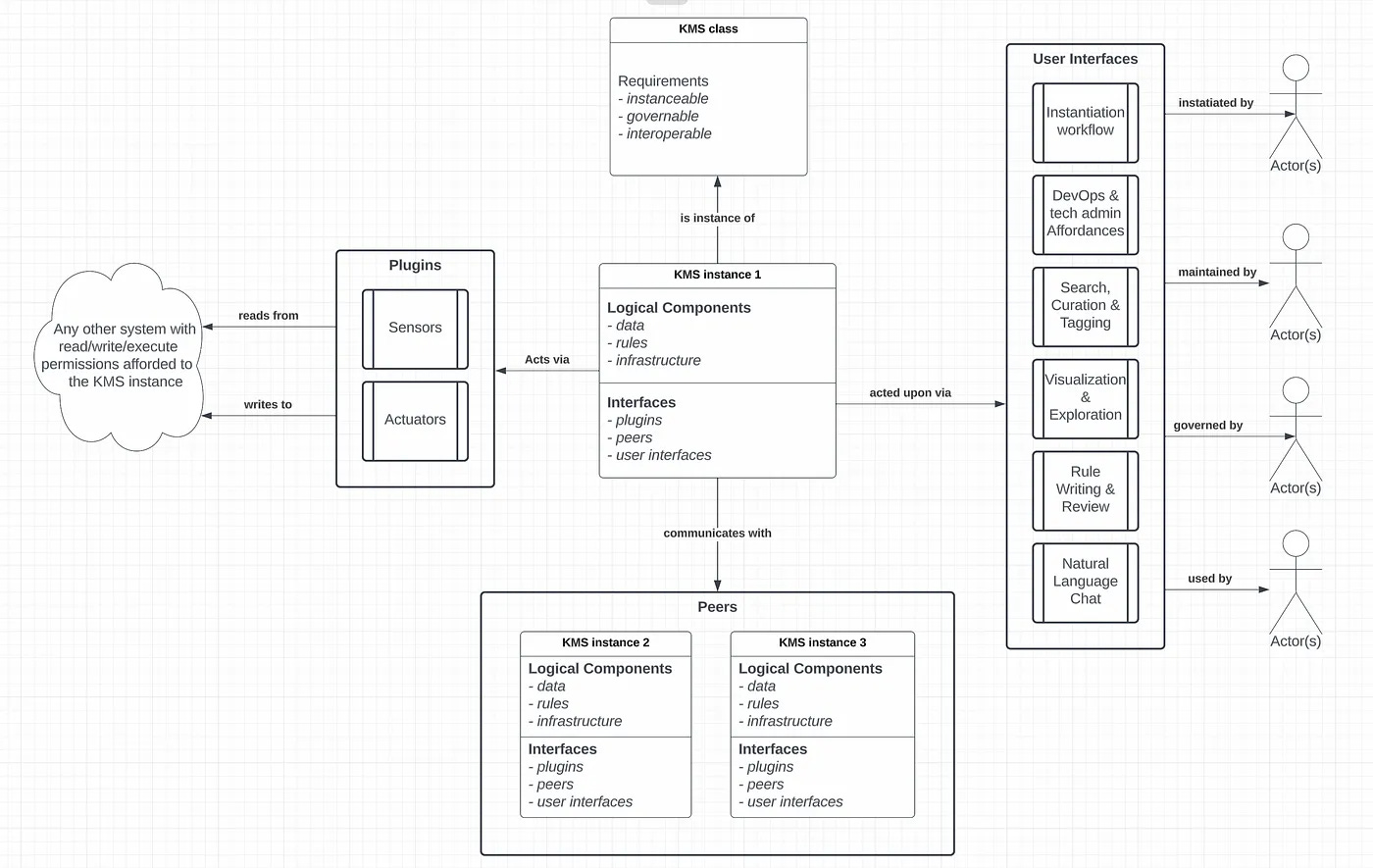

The paper presents an ethnographic study of an experiment conducted at BlockScience, an engineering services company. It focuses on the development of 'constituting an AI' and its implications for accountability. The experiment integrated a pre-trained Large Language Model (LLM) with the company's internal Knowledge Management System (KMS) and made it accessible through a chat interface. The research offers a foundational perspective for understanding accountability in human-AI interactions within organisational contexts, and it suggests strategies for aligning AI technologies with human interests across a range of settings.

Read on at:

Nabben, Kelsie, Constituting an AI: Accountability Lessons from a LLM Experiment (September 1, 2023). Available at SSRN: https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4561433